Experimentation + KPIs: How Product Managers launch the right features, faster

Optimizely’s Full Stack enables product teams to improve their visitors’ experience in a quicker, easier, and safer way than the typical product development process. It provides the tools to experiment, optimize, and roll out new features so that value is delivered to your customers. No matter what part of Full Stack you are using, you will need a measurement plan to determine whether the improvements you make are actually useful and enjoyable to your visitors.

Over the past few years as Optimizely’s Lead Strategy Consultant, I’ve seen the best product teams follow a decision policy for analysis when using Full Stack, which I’ll outline below. Because Full Stack testing typically involves a wider array of metrics, agreeing upfront on what success looks like across those metrics will shorten the time to a decision and in turn increase velocity (the number of experiments run). It can also ensure you are more consistently measuring experiment success and aligning it back to your top-line goals.

To start, it’s important to understand the tools in your product development toolkit that can be used via Full Stack. Knowing these differences upfront has helped teams determine unique measurement plans.

- A/B Test – A/B testing is a method of comparing two versions (or more) of a page or app screen against each other to determine which one performs better. You can assign metrics as you would in Optimizely’s Web product and take full advantage of Stats Engine.

- Feature Test – A Feature Test is delivered much like an A/B test, but is built upon a Feature in Optimizely and takes advantage of feature variables. Same as an A/B Test, you have the ability to assign metrics such as click-through rates, purchases, and revenue while leveraging Stats Engine to make decisions.

- Feature Flag / Rollout – Feature Flags allow you to build conditional feature branches into code in order to make logic available only to certain groups of users at a time. The rollout enables you to turn on a feature flag for a set of users: a percentage of users, a particular audience, or both. You do not have the ability to assign metrics. Rollouts are used for launching features, so no impressions are sent and no extra network traffic is generated.

- Multi-Armed Bandit – A multi-armed bandit is an optimization play on an A/B test that uses machine learning algorithms to dynamically allocate traffic to variations that are performing well, while allocating less traffic to variations that are underperforming. You can assign metrics of any type just like an A/B Test or a Feature Test. However, instead of statistical significance, the multi-armed bandit results page focuses on improvement over equal allocation as its primary summary of your optimization’s performance. These can only be used on one of the two test types, and not a Feature Rollout.
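To make the Feature Flag / Rollout idea above concrete, here is a minimal sketch of a percentage rollout with an optional audience check. It is not Optimizely's SDK or its bucketing algorithm. The function names (`bucket`, `is_enabled`), the flag key, and the audience rule are all hypothetical, and hashing the user ID is just one common way to keep a user's assignment stable from one request to the next.

```python
import hashlib
from typing import Callable, Optional


def bucket(user_id: str, flag_key: str) -> float:
    """Deterministically map a user to a value in [0, 100) for a given flag."""
    digest = hashlib.sha256(f"{flag_key}:{user_id}".encode("utf-8")).hexdigest()
    # Use the first 32 bits of the hash as a fraction of the full 32-bit range.
    return int(digest[:8], 16) / 0x100000000 * 100


def is_enabled(
    user_id: str,
    flag_key: str,
    percentage: float,
    audience: Optional[Callable[[dict], bool]] = None,
    attributes: Optional[dict] = None,
) -> bool:
    """Return True if the flag is on for this user.

    The user must match the audience (when one is given) and fall inside
    the rollout percentage.
    """
    if audience is not None and not audience(attributes or {}):
        return False
    return bucket(user_id, flag_key) < percentage


# Hypothetical audience: only users tagged as beta testers.
def beta_users(attrs: dict) -> bool:
    return attrs.get("beta") is True


# Conditional feature branch: new form for the rollout group, old form otherwise.
if is_enabled("user-42", "interactive_form", 100, beta_users, {"beta": True}):
    form = "new interactive form"
else:
    form = "existing form"
```

Because the bucket depends only on the user ID and the flag key, raising the percentage from 10 to 50 keeps the original 10% of users in the feature and adds more alongside them.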

As a product owner you are going to use these sequentially, and often rather quickly back-to-back. You may be building a new interactive form feature and use a Feature Flag to expose it only to your beta user group. Once early signals are strong, you can roll it out to a larger set of users beyond your beta group to validate its performance impact. If no performance problems are found, you can shift to true experimentation in a Feature Test with different field types, presentations, etc. And over time you may leverage Multi-Armed Bandits to optimize the form’s lead generation during a marketing campaign. Consider how your decision policies may shift depending on a scenario like this one!

Most organizations have a “single source of truth” when it comes to measurement across their products and experiences. Optimizely is no different. We encourage teams to leverage Optimizely in conjunction with that platform, whether it is a tool such as Amplitude or an internal data warehouse. The key is to agree on a success plan for measurement across your mix of analytics platforms before you launch a test or a feature.

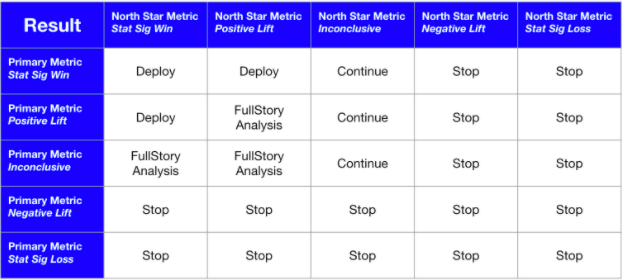

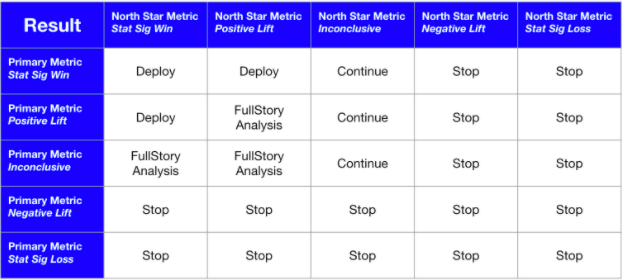

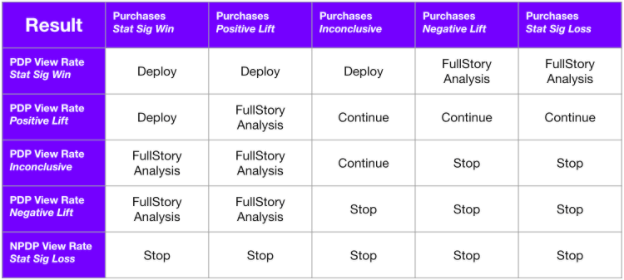

Below is an example (downloadable template here) of how you might approach this across everything you do in Optimizely’s Full Stack in balance with your internal data warehouse (“source of truth”). Notice that a test is called a winner only when there is a statistically significant win on the north star metric and a positive lift on the primary metric (and the inverse for a loser).
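The winner rule described above can be sketched as a small function. This is only one reading of that rule, not the template itself: the `MetricResult` type, the `decide` function, and the "inconclusive" fallback are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class MetricResult:
    lift: float        # relative change vs. baseline, e.g. 0.04 means +4%
    significant: bool  # whether the result reached statistical significance


def decide(north_star: MetricResult, primary: MetricResult) -> str:
    """Apply a pre-agreed decision policy to one experiment's results."""
    # Winner: significant win on the north star AND positive lift on the primary.
    if north_star.significant and north_star.lift > 0 and primary.lift > 0:
        return "winner"
    # Loser: the inverse, a significant loss AND negative lift on the primary.
    if north_star.significant and north_star.lift < 0 and primary.lift < 0:
        return "loser"
    # Anything else (no significance, or metrics that disagree) needs more analysis.
    return "inconclusive"


result = decide(
    north_star=MetricResult(lift=0.03, significant=True),
    primary=MetricResult(lift=0.05, significant=False),
)
```

Writing the policy down this explicitly before launch is the point: nobody has to debate what counts as a win after the results come in.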

Some organizations have a set standard, such as a specific Key Performance Indicator (KPI) that must be impacted positively, for everything they do in Optimizely (as in the example above).

Often, however, the KPI is set per test or feature launch. For a test it may look like this:

Hypothesis: If we return on-site search results ordered by the most clicked on results (as “Most Popular”) as opposed to Newest, then we will increase product view rate and in turn purchase completion rate.

We also recommend leveraging a digital experience analytics platform like FullStory to help guide your decision-making when the metrics you’re tracking haven’t reached stat sig or are inconclusive. This type of tool will give you deeper insight into how users are engaging with a variation to help you further validate your results. As you can see in the images above, we rely on FullStory analyses under particular pre-defined circumstances.

When launching a feature using an Optimizely rollout, you are likely introducing different metrics than when you launch a test: metrics that consider impact to the product beyond pure optimization, such as performance. For performance testing, those could be metrics like change failure rate and time to restore service. These types of metrics may not make it into your decision policy for the success of the feature, but they should! For example, are you willing to accept a non-significant increase in failure rate in exchange for a significant increase in form completions? These are the scenarios you should have a point of view on before pressing start on your experiment.
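One way to take a position on that trade-off ahead of time is to write the tolerance down as a guardrail. The sketch below is illustrative only: the function name, the return labels, and the default tolerance of half a percentage point are hypothetical, and your own threshold should come from your team, not from this example.

```python
def launch_decision(
    completion_lift: float,
    completion_significant: bool,
    failure_rate_delta: float,
    max_failure_rate_delta: float = 0.005,  # hypothetical tolerance: +0.5 points
) -> str:
    """Combine an optimization metric with a performance guardrail."""
    # The guardrail is checked first: exceeding the tolerance blocks the launch
    # no matter how good the form completion numbers look.
    if failure_rate_delta > max_failure_rate_delta:
        return "hold"
    if completion_significant and completion_lift > 0:
        return "launch"
    return "keep testing"


# Significant lift in form completions, failure rate up by 0.2 points.
decision = launch_decision(
    completion_lift=0.08,
    completion_significant=True,
    failure_rate_delta=0.002,
)
```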

Why would you decide to set your KPI at a program level as opposed to for each launch within Optimizely? You may do this at a program level if you’re only leveraging a single part of Optimizely Full Stack to start (e.g. Rollouts). Maybe you’re only using Optimizely’s A/B Test feature so the organization can create an agreed upon view across the board. Or the organization may already have a set way that it works on analysis with technology partners and there’s a clear line already established.

More often than not (whether program-level or case-by-case), organizations tend to draw the line on where analysis in Optimizely stops at their most important north star metrics. These can be metrics like revenue, lifetime value, bookings, lead score, etc. Optimizely’s Services team has extensive experience creating these data pipelines and measurement practices wherever that line is drawn. The evaluation of which other metrics are measured within Optimizely is then made on a case-by-case basis. What are some decision points?

- Which metrics are most important to have a confident read on, as opposed to doing more exploratory analysis?

- What data definitions are familiar to our team members / leadership as we socialize results to make decisions on these types of changes?

- Do we even have the ability to institute these metrics in our other analytics platforms in time for our launch in Full Stack?

A lot of this is going to get better and easier though! Optimizely is working on a capability (Data Lab), which will “break Optimizely’s results pipeline into interchangeable components for collecting data, measuring metrics, applying statistics and building and sharing reports” as our co-founder Pete Koomen noted. At the end of the day, we want to make it easier for teams to analyze experiments and features in a way that is quicker and more relevant to their business. Data Lab will unlock this.

If you’re interested in learning more about Data Lab please reach out to us! Or if you’re currently a customer of Optimizely you can contact your Customer Success Manager directly.